Another vital component of the project is the “flipped classroom,” a teaching tool that is already widely used on campus. The practice reverses the traditional structure in which lectures happen in the classroom and problem-solving happens in the library or dorm room. Steven records his lectures and makes them available to students online, where they can be watched, paused, and revisited anytime. Students now spend class time working individually and in groups, with Steven on hand to answer questions.

“I’ve found that watching students solve problems right in front of me, and sharing suggestions or strategies in real time, is a more effective use of my skills,” he says. “It also helps with students who get it and have advanced questions.” This way, he’s also able to field any questions or issues that come up with the new Polar CGI component of his curriculum.

To explore the combined impacts of polar data, CGI, and flipped classrooms on student learning, Steven and Lea need to evaluate the project’s results. “All the components of this project have been established as sound pedagogy, but the combination hasn’t been tested,” Steven explains. “So we’re aiming to evaluate how this works: Do students learn differently in such an environment? Does the method attract students who may not otherwise have been attracted to the sciences?”

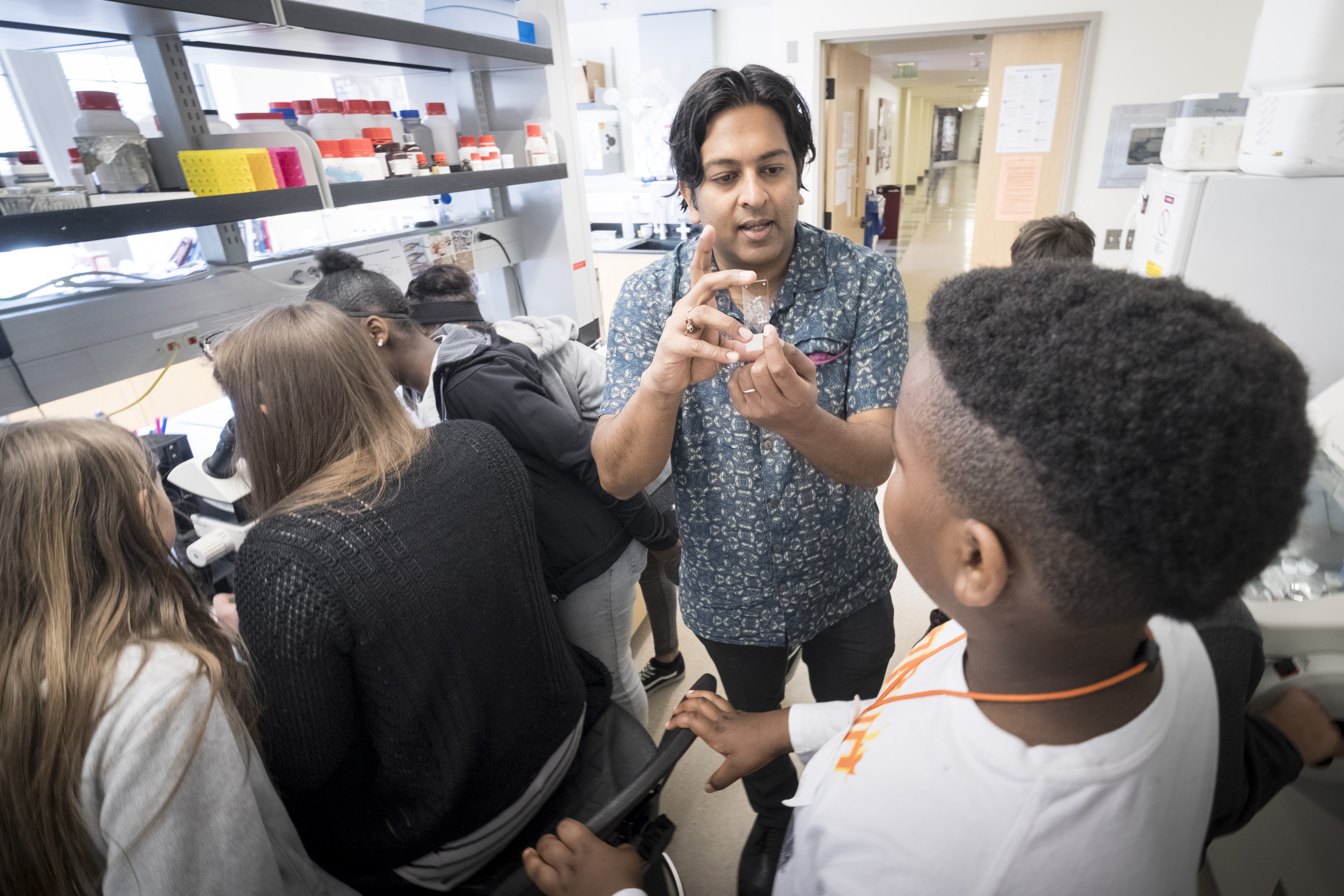

The team knew that they didn’t want to write an exam to simply test knowledge. That wasn’t the point, since these modules within classes are designed to get students to engage with the data, to critically analyze it—to think. What kind of evaluation tool could show that a chemistry, economics, or graphics student is actually engaging with the information unique to their class?

Aedin and Max brought a valuable student perspective to the evaluation process. “We really wanted to focus on getting students to figure out how to ask questions,” Aedin says. “Doing real science at advanced levels is more creative and less rigid, and we wanted the questions to reflect that that’s how science actually happens.”

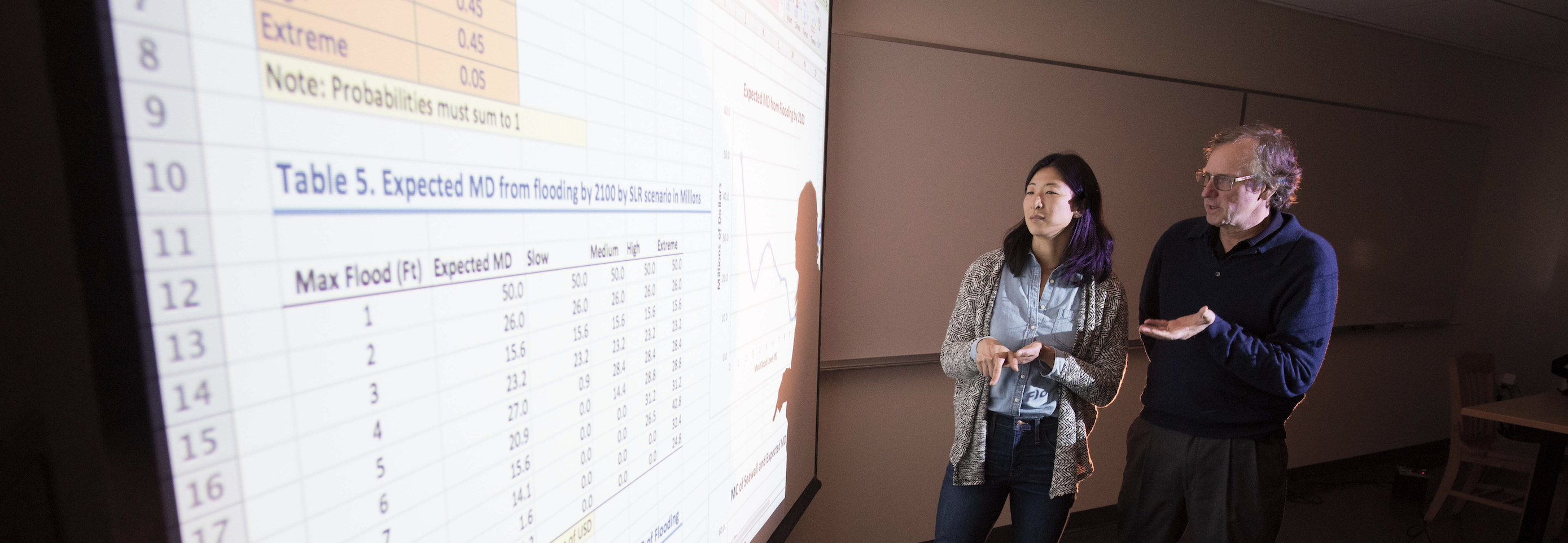

To that end, the team came up with pre-assessment and post-assessment survey queries. In the pre-assessment query, students were asked to look at the polar data particular to the subject they were studying and ask, not answer, questions about it. Then they worked through the Polar CGI module created for their class. In the post-assessment query, students responded to the same prompts again. The sequence mirrors what scientists do: ask questions and form hypotheses both before and after grappling with data.

How does the team know whether they’ve achieved their goal of getting students to think critically, like scientists? “We’re looking for greater complexity in their questions,” Lea says.

The first test run of the evaluation process was a success. “It looked like, over time, the students’ answers became more sophisticated,” Aedin says. This indicated that students were truly engaging with the information. Aedin called the method “really inclusive” since it gets students to ask their own questions rather than answer a professor’s.

Aedin plans to continue working on the design of this process as part of her senior thesis. “I’ll be evaluating the surveys and figuring out how to code the answers,” she says. “I’ll code as many as I get back and hope to be able to quantify the success of the modules.” The external evaluator for the project, Candiya Mann from the University of Washington, will also be auditing the assessment results.

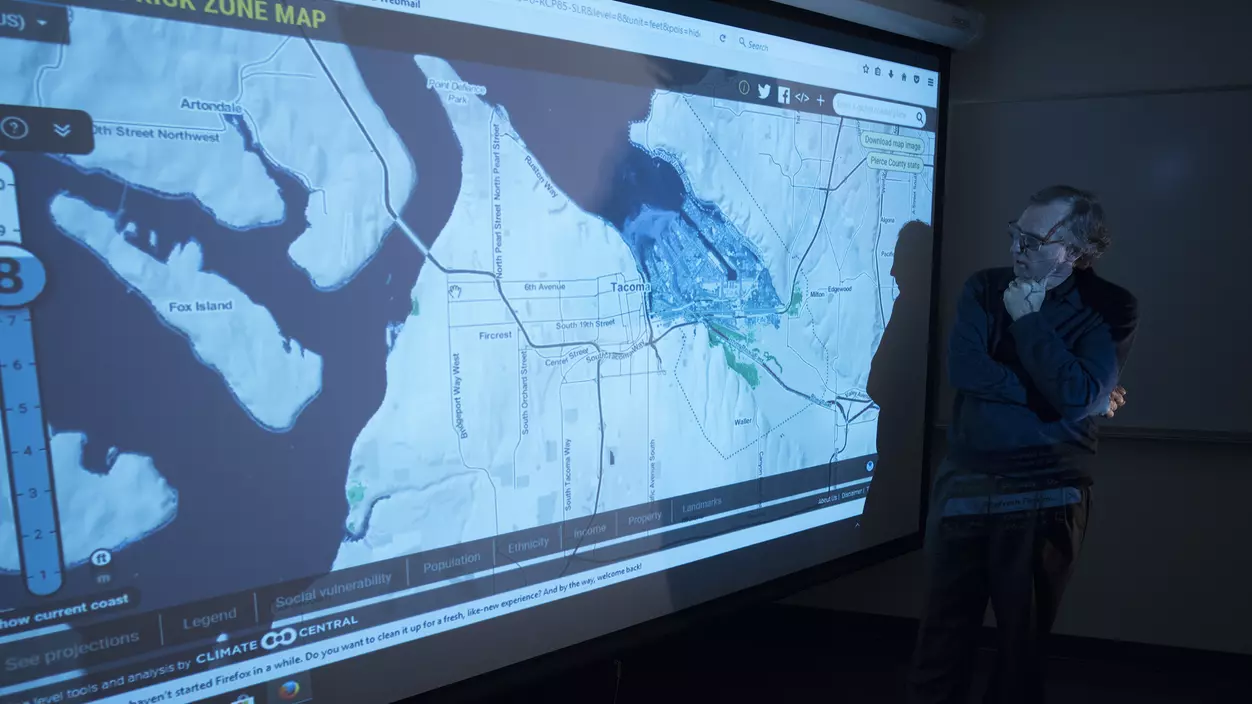

The NSF grant will allow the team to continue developing the Polar CGI modules for two years. They hope to double the number of participating professors in the next academic year, so that they can try the modules out with a wider range of curricula. The students are certainly hungry for it. “Climate change is something we care about,” says Max, “since we are poised to feel the effects of it within our lifetimes.”